Integrating Sound in Living Architecture Systems (2018)

Poul Holleman

category:

Journal

date:

August 2018

authors:

Poul Holleman, Salvador Breed, Paul Oomen

published by:

Spatial Sound Institute, Living Architecture Systems Group

The paper elaborates on the results of the collaboration between the Spatial Sound Institute and the Living Architecture Systems Group throughout 2017-2018. It argues that, rather than adding a separate layer, spatial sound weaves a meaningful fabric into sculptural form and living architecture. Spatial sound can embed an architectural design within a sonic field (exterior), or it can itself be embedded inside sculptural objects (interior).

Introduction

Sound is an essential medium in understanding space, expressing emotions, and abstracting organic and artificial phenomena. The presence of a physical sculpture demands spatially and physically defined relations with sound. The in-depth collaboration with Beesley et al. has challenged 4DSOUND to extend the control of sound beyond an empty spatial canvas that hosts virtual sound objects, to a world where the virtual is seamlessly integrated with the actual.

The 4DSOUND paradigm essentially regards sound in terms of its sculptural and architectural qualities. Sounds are objects that are sculpted by giving them dimensions, constructing sub-particles, and applying properties through sound design. These objects are subsequently positioned in a (virtually) infinite space and can move along particular trajectories or according to a certain behaviour. Sound can come intimately close, as if almost touching the body, or appear on a distant horizon, moving like a flock of birds or straight and fast like cars on a highway. On top of that, a virtual environment responds to the presence of these sounds with acoustic reflections, modelled on being in a small room or a vast space. These environments can take surreal forms as well, creating otherworldly textures and gestures that evoke imagination beyond the recognisable.

4DSOUND is an instrument: a set of tools that makes it possible to compose and perform with spatial sound intuitively and in great sculptural detail. In the context of living architecture systems, the challenge has been not only to create a virtual sound world that can be explored, but also to embed this sound world in sculptural material, giving actual objects a voice so that they become more meaningful actors in a designed environment. Three R&D topics have been central to realising this:

1. Distinguishing speaker constellations in type and function to gain control over precise sound projection

2. Introducing irregular speaker configurations to cater to irregular sculpture

3. Making virtual sound objects, actuators, and sensor interfaces part of the same virtual space, and to enable integrated interaction.

Exterior and Interior

To embed a distributed network of speakers within sculptures, we needed to redefine the 4DSOUND panning algorithms so as to expand the possibilities beyond sound spatialisation within a single, equally spaced grid. To this end, we conceptualised a distinction between exterior and interior speakers.

The exterior field can be considered similar to the way 4DSOUND setups have regularly been used up until today. It covers the omnidirectional field of the actual space, and virtually extends this field beyond the actual space to infinity. The exterior speakers project what surrounds the sculpture, both in the near field and in the distance, above and below, moving around and through the listeners. Because 4DSOUND's equally distributed grids provide a social listening area that is not restricted to a sweet spot (P. Oomen, P. Holleman, L. de Klerk: A New Approach to Spatial Sound Reproduction and Synthesis; Living Architecture Systems Symposium: White Papers, 2016), it is up to the listeners to create their own experiential perspective by moving around.

The interior speakers voice the sculpture itself. The number of discrete actual objects these interior speakers represent is an artistic decision and a matter of instrumental design. In general, the spatial relations between the sculptural objects dictate which interior speaker constellations can be distinguished and processed accordingly by the 4DSOUND engine. In the projects realised to date, we have managed to generate sounds that behave like a dense, crackling neural network, but also the presence of smaller objects within the sculpture, like electronic birds in a tree.

For the most part, sounds in either or both fields share the same extensive set of controllable parameters, which allow one to sculpt the movement, physics and perception of the sounds in space. In terms of control, the added versatility of addressing sounds to the exterior field or to the interior fields of different sculptural entities entails only a minor expansion in parameters. A simple matrix interface routes sound sources to the desired speaker constellations. Sounds that live within one sculptural object can easily be transferred to, or spread over, other objects. Indirect connections are also possible, such as adding reflections in the exterior field in response to sounds that originate in the interior, potentially creating naturalistic echoes that seem to come off walls bigger and further away than those of the actual space one is in.
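The matrix routing described above can be pictured as a table of gains from each sound source to each speaker constellation. The following is a minimal, hypothetical sketch rather than the 4DSOUND interface. The function names, the constellation names, the equal-power spreading and the sine/cosine transfer law are all our own assumptions.

```python
import math
from typing import Dict, Iterable

# Hypothetical routing matrix: matrix[source][constellation] -> gain in 0..1.
# Not the 4DSOUND API; names and gain laws are illustrative assumptions.
Matrix = Dict[str, Dict[str, float]]


def make_matrix(sources: Iterable[str], constellations: Iterable[str]) -> Matrix:
    """Create a matrix with every source muted on every constellation."""
    names = list(constellations)
    return {s: {c: 0.0 for c in names} for s in sources}


def spread(matrix: Matrix, source: str, targets: Iterable[str]) -> None:
    """Spread a source evenly over several constellations at constant total power."""
    chosen = set(targets)
    gain = 1.0 / math.sqrt(len(chosen))
    for c in matrix[source]:
        matrix[source][c] = gain if c in chosen else 0.0


def crossfade(matrix: Matrix, source: str, from_c: str, to_c: str, t: float) -> None:
    """Transfer a source between two constellations (t=0: all from_c, t=1: all to_c)
    using an equal-power sine/cosine law; all other constellations are muted."""
    t = min(max(t, 0.0), 1.0)
    for c in matrix[source]:
        matrix[source][c] = 0.0
    matrix[source][from_c] = math.cos(t * math.pi / 2)
    matrix[source][to_c] = math.sin(t * math.pi / 2)


# Example: a crackle spread over sphere and canopy, a bird halfway between them.
m = make_matrix(["crackle", "bird"], ["exterior", "sphere", "canopy"])
spread(m, "crackle", ["sphere", "canopy"])
crossfade(m, "bird", "sphere", "canopy", 0.5)
```

Equal-power laws are a common choice because they keep perceived loudness roughly steady while a sound moves between objects. A linear law would dip in the middle of a transfer.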

For the installation ASTROCYTE we introduced the ‘Sphere Engine’, a prototype application that complemented the regular 4DSOUND Engine. The Sphere Engine was designed to drive twelve speakers inside the Large Sphere, the central protagonist of the sculpture. The speakers were spread more or less equally over the core semi-sphere, which was raised above the listeners' heads at a slight tilt, resulting in a defined focal listening point within the spatially expanded sculpture. Combined with the gentle directional quality of the speakers and the granular sound design, this reinforced the sensation of proximity and the feeling of intimacy with this large, radiating object.
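As a rough illustration of driving twelve speakers spread over a semi-sphere, the sketch below does two things. It places twelve unit vectors over a hemisphere with a golden-angle spiral, then derives a gain for each speaker from the angle between a virtual source direction and that speaker. Both the layout and the cosine-power gain law are assumptions made for illustration. The Sphere Engine's actual algorithm is not described in this paper.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def hemisphere_points(n: int) -> List[Vec]:
    """Spread n unit vectors roughly evenly over the hemisphere z > 0.
    Heights are evenly spaced (equal-area bands on a sphere); azimuths
    follow a golden-angle spiral."""
    golden = math.pi * (3 - math.sqrt(5))
    pts = []
    for i in range(n):
        z = 1 - (i + 0.5) / n
        r = math.sqrt(1 - z * z)
        theta = golden * i
        pts.append((r * math.cos(theta), r * math.sin(theta), z))
    return pts


def sphere_gains(source: Vec, speakers: List[Vec], sharpness: float = 4.0) -> List[float]:
    """Gain per speaker from the cosine of the angle between the source direction
    and the speaker, raised to `sharpness` (higher = smaller, more focused source).
    Gains are normalised to unit total power."""
    norm = math.sqrt(sum(c * c for c in source))
    s = [c / norm for c in source]
    w = [max(0.0, sum(a * b for a, b in zip(s, spk))) ** sharpness for spk in speakers]
    p = math.sqrt(sum(x * x for x in w))
    return [x / p for x in w] if p > 0 else [0.0] * len(speakers)


speakers = hemisphere_points(12)
gains = sphere_gains((0.0, 0.0, 1.0), speakers)  # a source at the top of the dome
```

With twelve speakers on a small surface, even a modest `sharpness` yields small, clearly located sources. Lowering it smears a sound over the whole sphere.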

Image: the Large Sphere of ASTROCYTE; the inner semi-sphere contains 12 speakers. Photo courtesy of Philip Beesley.

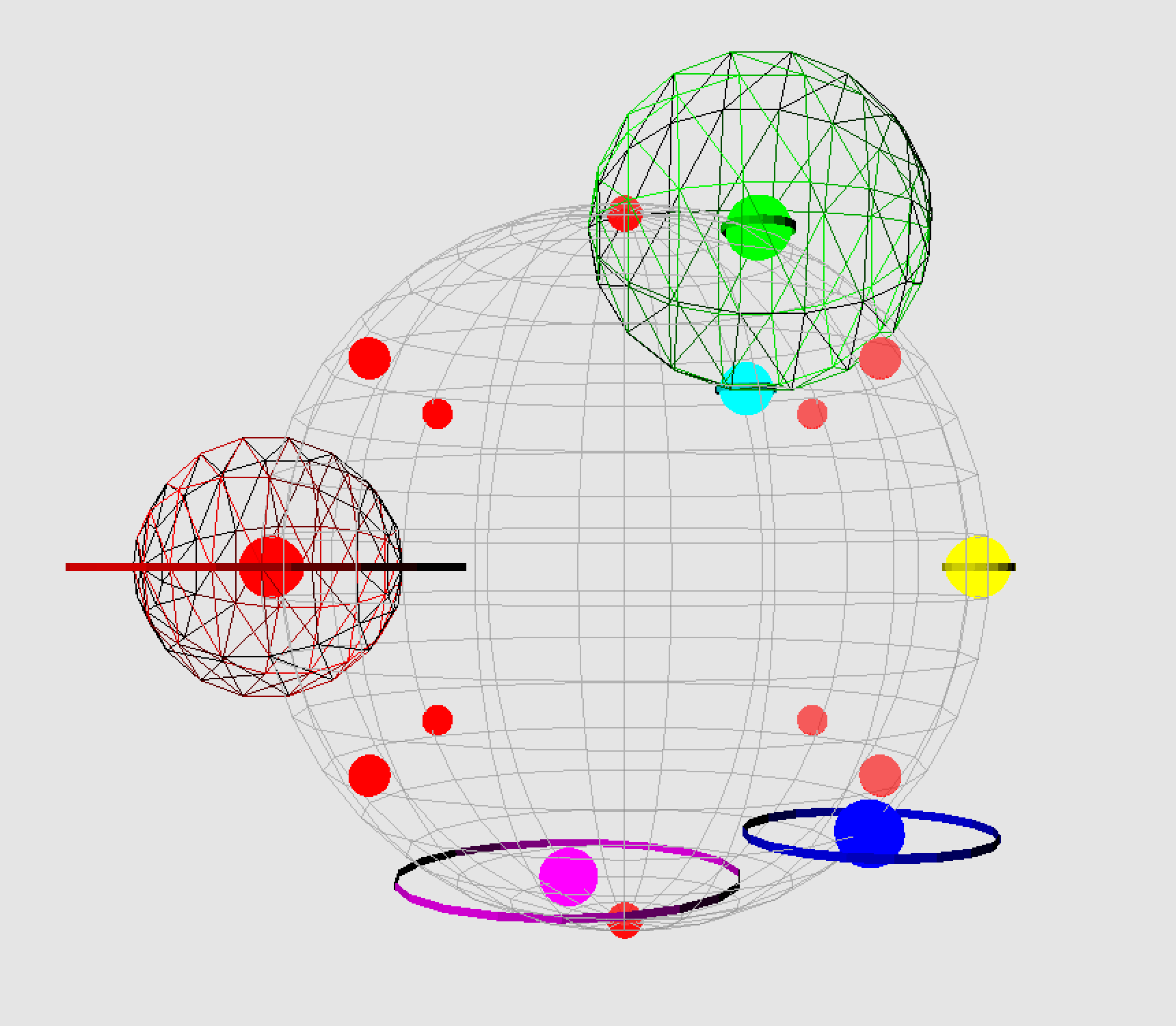

Image: 3D visualisation of the Sphere Engine spatialisation developed for ASTROCYTE

projects:

ASTROCYTE, DXEDIT, Toronto, Canada, Fall 2017

AMATRIA, Indiana University Bloomington, Indiana, USA, Spring 2018

NOOSPHERE | AEGIS, Royal Ontario Museum, Toronto, Canada, Summer 2018

We found that the results of simple technical sound tests, such as exposing the sphere to continuous pink noise and a variety of waveform pulses with different spatial projections, delivered a broad palette of characters and abstract associations. For example, synchronised pulses accentuated the shape of the sphere as a whole, whereas diffracted sounds connected more to the shifting textures of its parts and the granularity of inner processes within the sphere. Because of the speaker density, the perception of small, moving sound sources was extremely clear. Altogether, the sphere provided a tool for ‘sonic painting’ with a broad palette onto a tightly focused frame.
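The contrast between synchronised and diffracted pulses can be sketched as two onset schedules for the twelve speakers. This is a hypothetical reading. We assume that "diffracted" means each pulse lands on a single random speaker with a small random time offset. The function names and parameters are illustrative only.

```python
import random
from typing import List, Tuple

Event = Tuple[float, int]  # (onset time in seconds, speaker index)


def synchronised_pulses(n_speakers: int, period: float, count: int) -> List[Event]:
    """Every pulse fires on all speakers at once, outlining the sphere as one shape."""
    return [(k * period, spk) for k in range(count) for spk in range(n_speakers)]


def diffracted_pulses(n_speakers: int, period: float, count: int,
                      jitter: float, seed: int = 0) -> List[Event]:
    """Each pulse fires on one randomly chosen speaker with a random delay of up to
    `jitter` seconds, scattering the sound over the surface as texture."""
    rng = random.Random(seed)
    events = []
    for k in range(count):
        onset = k * period + rng.uniform(0.0, jitter)
        events.append((onset, rng.randrange(n_speakers)))
    return sorted(events)


sync = synchronised_pulses(12, 0.5, 4)
diff = diffracted_pulses(12, 0.5, 8, jitter=0.1, seed=3)
```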

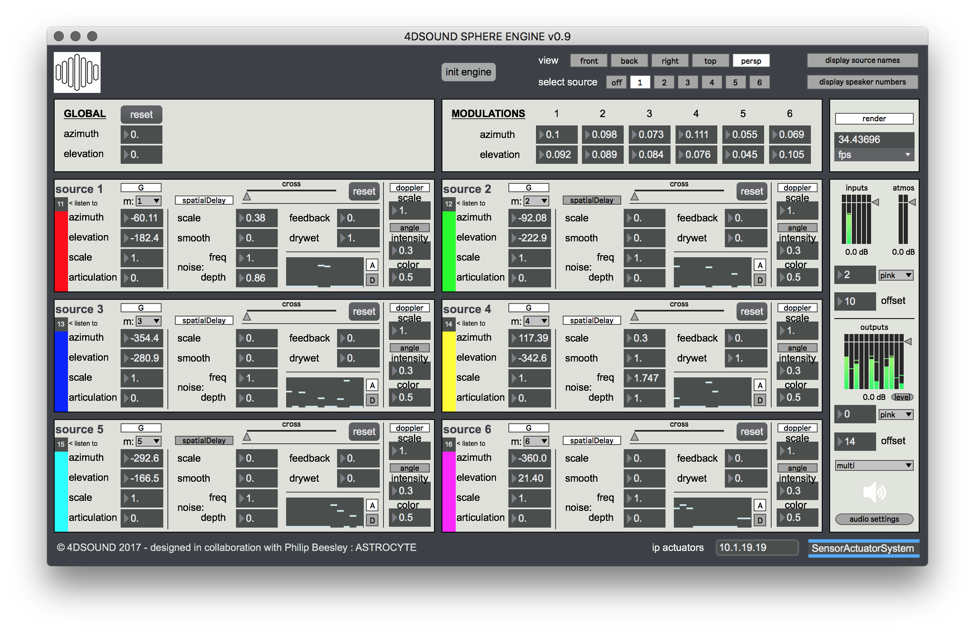

Image: user interface of the Sphere Engine developed for ASTROCYTE

Irregular Speaker Configurations

4d.pan, the proprietary panning algorithm developed by 4DSOUND, was initially limited to symmetrical rectangular speaker grids. To provide asymmetrical sculptures with varying densities of interior speakers, we expanded the 4d.pan algorithm to support irregular setups of varying sizes and shapes. The rule of thumb remains to set up field speakers as equally spaced as possible, in order to ensure balanced sound projection and refined, continuous spatial definition in all directions. However, so as to support any artistic or acoustic reason for creating more irregular grids, all configurations are allowed. We found that combining an equally spaced exterior field with a sculpturally determined, irregularly spaced interior field delivers a convincing and powerful sound space with explicit control over sonic presence and attention.
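Because 4d.pan is proprietary, the sketch below substitutes a different, publicly described technique that also handles arbitrary layouts: distance-based amplitude panning (DBAP). Each speaker's gain falls off with its distance to the virtual source, and the gains are normalised to constant total power. A rolloff of 6 dB per doubling of distance approximates inverse-distance gains. The speaker coordinates in the example are made up.

```python
import math
from typing import List, Sequence


def dbap_gains(source: Sequence[float], speakers: List[Sequence[float]],
               rolloff_db: float = 6.0, blur: float = 0.2) -> List[float]:
    """Distance-based amplitude panning for an arbitrary (irregular) speaker layout.

    rolloff_db: attenuation per doubling of distance (6 dB ~ inverse distance).
    blur: spatial blur added to every distance so gains stay finite when the
          source sits exactly on a speaker."""
    a = rolloff_db / (20 * math.log10(2))
    d = [math.sqrt(math.dist(source, spk) ** 2 + blur ** 2) for spk in speakers]
    k = 1.0 / math.sqrt(sum(1.0 / di ** (2 * a) for di in d))  # unit-power normalisation
    return [k / di ** a for di in d]


# Five irregularly placed speakers (hypothetical coordinates in metres).
speakers = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 3.0, 0.0), (5.0, 5.0, 1.0), (1.0, 4.0, 2.0)]
gains = dbap_gains((0.2, 0.1, 0.0), speakers)
```

Unlike grid-based pairwise panning, DBAP needs no assumptions about speaker topology, which is why it suits sculptural layouts. Its trade-off is that every speaker contributes a little, so sources are less sharply defined than on a dense, regular grid.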

A given constellation of speakers can be divided into subsets. This allows extremely detailed control in spatial sound design, as it creates different speaker groups (areas) for sound sources to exist within. The obvious division, introduced above, is the separation into exterior and interior. However, the structure of the sculpture invites us to mirror its objects when dividing the interior speakers. This results in an almost self-evident design, in which the sonic and material interpretation of the sculpture dictates the speaker configuration instead of the other way around. We are pleased that handling these complexities in the backend results in an elegant frontend for intuitive system handling and sound design.

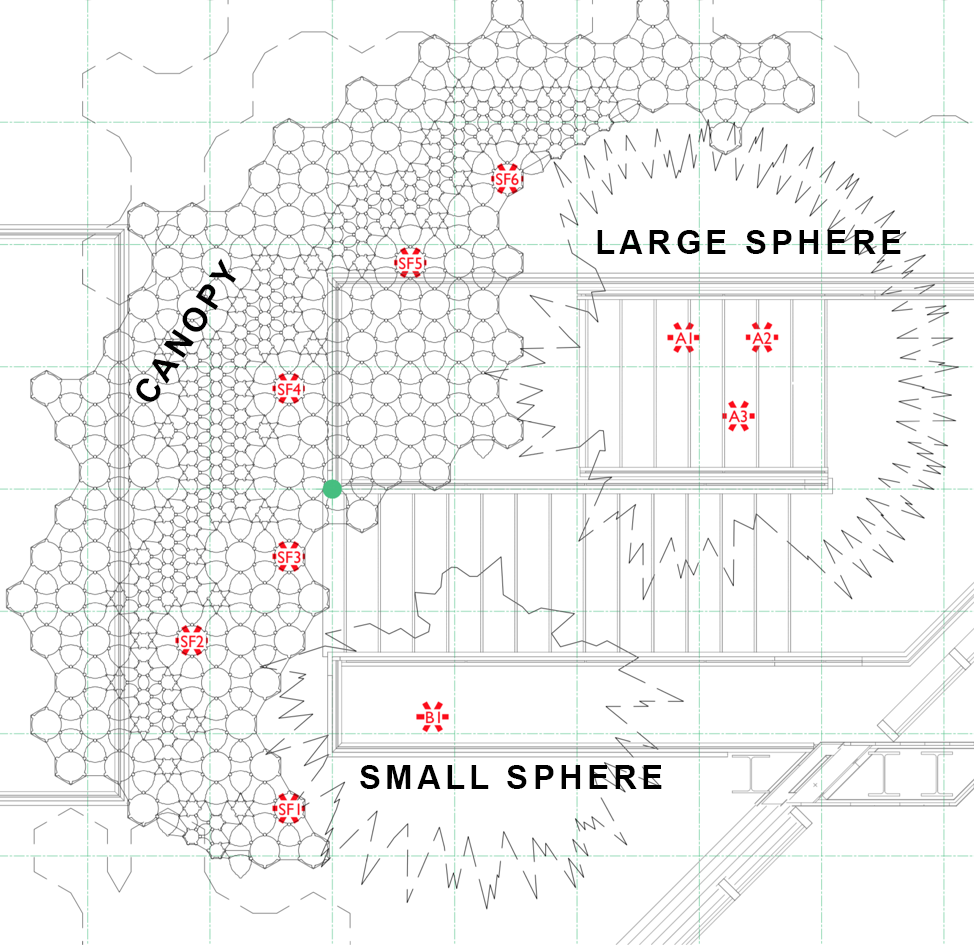

For the permanent environment AMATRIA, we followed up on ASTROCYTE by catering to 10 speakers distributed through the larger part of the sculpture. We identified the Large Sphere (speakers A1-A3), the Small Sphere (speaker B1), and the Canopy (speakers SF1-SF6). By defining these three sub-constellations, we were able to develop the spatial sound design around two actor objects (the Spheres) and a more diffracted area (the Canopy).
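The AMATRIA sub-constellations can be written down as plain data. A source that lives in one group then only addresses that group's speakers. Only the speaker names come from the text. The dictionary layout, the `group_gains` helper and its unit-power normalisation are hypothetical.

```python
import math
from typing import Dict, List, Optional

# Speaker names follow the AMATRIA description; the data layout is illustrative.
AMATRIA: Dict[str, List[str]] = {
    "Large Sphere": ["A1", "A2", "A3"],
    "Small Sphere": ["B1"],
    "Canopy": [f"SF{i}" for i in range(1, 7)],
}
ALL_SPEAKERS: List[str] = [spk for group in AMATRIA.values() for spk in group]


def group_gains(group: str, weights: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Speaker gains for a source that lives in one sub-constellation.

    Speakers outside the group stay silent. Inside it, optional per-speaker
    weights (default 1.0, e.g. produced by any panner) are normalised to unit power."""
    members = AMATRIA[group]
    w = {spk: (weights or {}).get(spk, 1.0) for spk in members}
    norm = math.sqrt(sum(v * v for v in w.values()))
    if norm == 0.0:
        return {spk: 0.0 for spk in ALL_SPEAKERS}
    return {spk: (w[spk] / norm if spk in w else 0.0) for spk in ALL_SPEAKERS}


small = group_gains("Small Sphere")
large = group_gains("Large Sphere", {"A1": 2.0})  # lean the sound towards A1
```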

Image: irregular speaker configuration of AMATRIA

The Large Sphere is the protagonist, and its three speakers, as opposed to the single speaker of the Small Sphere, provide a focussed but spatially rich presence. The differentiation made possible by a triad configuration creates spatial depth and a perception of dimensionality in the sounds projected onto the field. The single speaker in the Small Sphere is timid and, in the context of its neighbours, offers a clear contrast in sonic presence; nevertheless, it has a distinct character in its own right as a defined point in space, clearly different from the presence of sounds on the Large Sphere.

In the spatial sound design we experimented with independent roles for each character: for example, ‘breathing’ spheres with subtle reflections on the canopy. Other scenes resembled textures such as dense fog, or minimal raindrops and shattering glass. The flexibility of the instrument, able either to fill the entire space or to carefully occupy specific areas, proved essential in crafting the meeting point of sculpture and sound.

Image: AMATRIA installed at Indiana University, Bloomington USA.

Shared Worlds: Merging the Virtual and the Actual

Another important R&D subject has been the integration of sound spatialisation with other kinds of actuators: granular light, kinetic mechanisms and sensor interfaces. To create meaningful integrated interaction we developed the prototype Distance Based Interaction Engine (DBIE) in collaboration with Philip Beesley Architecture Inc. members Rob Gorbet & Adam Francey. The DBIE is a simplified interpretation of the spatialisation algorithms that already exist within 4DSOUND. In the 4DSOUND Engine, spatial properties of virtual sound objects are mapped to speaker amplitudes and audio signal processing (spatial synthesis). In the DBIE, basic spatial properties of an ‘Excitor Object’ (position, size, and force) are mapped to lights, kinetic objects, or any type of actuator.

The basic principle of Distance Based Interaction is that the intensity of an actuator (e.g. the brightness of a light, or the speed of a motor) is determined by its distance to a virtual object. This means every actuator holds position properties that match its actual relative location within the sculpture. The virtual Excitor Objects also live inside this 3D map, and their presence excites the actuators as they move closer and further away. An excitor can be an infinitely small point, or have any spherical size. An increase in size means it covers more virtual space and effectively comes closer to more actuators at once, resulting in more actuators being excited. The final parameter is Force, a multiplier of Distance Intensity acting as the upper limit of excitation. Combining size and force, one can, for example, create a large but weak excitor, or a small and strong one.
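The distance-based mapping described above can be sketched in a few lines. Size is treated as the radius of a spherical excitor and Force as the ceiling of the resulting intensity. Two details are our assumptions, because the DBIE's actual falloff curve and combination rule are not given here: a linear falloff over a fixed `reach` beyond the excitor's surface, and taking the maximum when several excitors overlap.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Excitor:
    position: Vec3
    size: float   # radius of the spherical excitor; 0.0 = infinitely small point
    force: float  # upper limit of excitation (0..1)


def distance_intensity(excitor: Excitor, actuator_pos: Vec3, reach: float = 1.0) -> float:
    """Full intensity inside the excitor's sphere, falling linearly to zero over
    `reach` beyond its surface, then scaled by force (so force caps the result)."""
    d = math.dist(excitor.position, actuator_pos)
    outside = max(0.0, d - excitor.size)
    falloff = max(0.0, 1.0 - outside / reach)
    return excitor.force * falloff


def excite(excitors: List[Excitor], actuators: Dict[str, Vec3],
           reach: float = 1.0) -> Dict[str, float]:
    """Intensity per actuator; where excitors overlap, the strongest one wins."""
    return {name: max((distance_intensity(e, pos, reach) for e in excitors), default=0.0)
            for name, pos in actuators.items()}


# Hypothetical actuator positions (metres) and two contrasting excitors.
actuators = {"light1": (0.0, 0.0, 0.0), "light2": (2.0, 0.0, 0.0), "motor1": (5.0, 0.0, 0.0)}
large_weak = Excitor((0.0, 0.0, 0.0), size=2.5, force=0.3)
small_strong = Excitor((2.0, 0.0, 0.0), size=0.0, force=1.0)
levels = excite([large_weak, small_strong], actuators)
```

Here the large, weak excitor lights both lamps at a gentle level. The small, strong one drives only the lamp it sits on to full intensity, and the distant motor stays at rest.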

Sensor systems are integrated in the same Cartesian space as the sound objects, their position in an infinite continuum of space defined by bipolar XYZ-positions (P. Oomen, P. Holleman, L. de Klerk: A New Approach to Spatial Sound Reproduction and Synthesis; Living Architecture Systems Symposium: White Papers, 2016). Infrared modules register the global presence of visitors and their positions within the sculpture, as well as visitors' hand gestures, which the design of the sensors invites. Virtual sound objects and excitors can react immediately and in a spatially coherent way, like a reflex. Infrared triggers can attract or repel these objects. We found there is a subtle balance between direct, basic responsiveness (e.g. triggering short samples at the location of the sensor) and more complex movements that evolve over time (e.g. ripple effects in the surrounding actuators, or the progression of the sound composition on a higher level). Correlating sound events in space with actuation through movement and behaviour strongly enhances the spatial relationship between the participating visitor and the sculpture.
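Because sensors share the same coordinates as sound objects and excitors, an infrared trigger can act directly as a point of attraction or repulsion. In the minimal sketch below, `react_to_sensor` and its constant-speed update rule are hypothetical. Real behaviour would likely add easing or the longer-term evolution mentioned above.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def react_to_sensor(obj_pos: Vec3, sensor_pos: Vec3, strength: float, dt: float) -> Vec3:
    """Move a virtual object toward (strength > 0) or away from (strength < 0) a
    triggered sensor at `strength` units per second. Attraction is capped so the
    object stops at the sensor instead of overshooting it."""
    delta = [s - o for s, o in zip(sensor_pos, obj_pos)]
    dist = math.sqrt(sum(c * c for c in delta))
    if dist == 0.0:
        return obj_pos  # already at the sensor: no direction to move in
    step = min(strength * dt, dist)
    x, y, z = (o + c / dist * step for o, c in zip(obj_pos, delta))
    return (x, y, z)


# One second after a trigger at (3, 4, 0): attract, then repel, a sound object at the origin.
attracted = react_to_sensor((0.0, 0.0, 0.0), (3.0, 4.0, 0.0), strength=2.0, dt=1.0)
repelled = react_to_sensor((0.0, 0.0, 0.0), (3.0, 4.0, 0.0), strength=-2.0, dt=1.0)
```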

In this setup, virtual sound objects, virtual excitors, speakers, actuators, and sensors all share the same world-space and are able to interact accordingly. This produces a versatile and intuitive interface for designing coherent movements and behaviour. Background behaviour can consist of small excitors, moving fast and slow, emerging and vanishing like snowflakes or a soft breeze through the woods. Bigger excitors could be phantom creatures, or abstract forces of energy beating through space. The technical essence is that individually indexed actuators no longer need to be considered in the artistic design; instead, the interface is elevated to a level of control where conceptual translation becomes more immediate and inspiring.

Image: Excitor Objects and their distance-based relationships to actuators present in a sculptural environment

The first implementation of the DBIE was realised for NOOSPHERE | AEGIS at the Royal Ontario Museum, Toronto. Background behaviour is generated by small, semi-random excitations throughout the sculpture. In the large sphere of NOOSPHERE, an excitor is active with transforming size, force, and trajectory. These works mark the first modest but profound steps in unifying algorithms for behaviour in sound, light, and movement, and for interaction with human input through sensor interfaces.
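Background behaviour of the kind described, small semi-random excitations that emerge and vanish, might be sketched as short-lived excitors spawned at random positions whose force fades out over their lifetime. Everything here is a hypothetical illustration, not the NOOSPHERE implementation. That includes the class, the size and force limits, the lifetime range and the linear fade.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class BackgroundExcitor:
    position: Vec3
    size: float   # radius
    force: float  # current ceiling of excitation
    life: float   # seconds until the excitor vanishes


def spawn(rng: random.Random, lo: Vec3, hi: Vec3, n: int,
          max_size: float = 0.3, max_force: float = 0.4) -> List[BackgroundExcitor]:
    """Create n small, weak excitors at random positions inside the box lo..hi."""
    return [
        BackgroundExcitor(
            position=(rng.uniform(lo[0], hi[0]), rng.uniform(lo[1], hi[1]),
                      rng.uniform(lo[2], hi[2])),
            size=rng.uniform(0.0, max_size),
            force=rng.uniform(0.0, max_force),
            life=rng.uniform(0.5, 3.0),
        )
        for _ in range(n)
    ]


def advance(excitors: List[BackgroundExcitor], dt: float) -> List[BackgroundExcitor]:
    """Age every excitor by dt, fade its force linearly towards zero over its
    remaining life, and drop the ones that have expired."""
    alive = []
    for e in excitors:
        remaining = e.life - dt
        if remaining <= 0.0:
            continue
        e.force *= remaining / e.life
        e.life = remaining
        alive.append(e)
    return alive


rng = random.Random(7)
flakes = spawn(rng, (0.0, 0.0, 0.0), (4.0, 4.0, 3.0), 20)
```

In a running system, each frame would call `advance`, occasionally `spawn` a few new excitors, and feed the survivors to a distance-based mapping such as the one sketched for Distance Based Interaction.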

Image: human input as excitation for kinetic sound, light and movement within NOOSPHERE

Future Research

In future research we will refine the panning algorithms, explore the characteristics of different kinds and combinations of speakers, and continue stretching the flexibility of sound spatialisation using varied speaker configurations. The divide between exterior and interior sound may be contrasted further to enhance depth of field, while at the same time inviting exploration of the coherence between the perception of the diffusion field and of focused objects, both emerging from the same technological principles in hardware and software.

A shared origin will be further developed to involve all technological disciplines within the same spatial interaction paradigm, embedded in the same software reality. An important goal is to find the right balance between utilising the processing power of a personal computer and running algorithms on the distributed network of microprocessors, which are not necessarily aware of structural properties but act more like individual agents within the network.

Another objective is to design an offline prototyping environment in order to develop interactive algorithms and behaviours independently of a hardware testbed. Since all virtual properties will share the same world, they should be included in a shared virtual environment. An extensive 3D visualisation of all activity will complement the possibilities to exploit the spatial worlds as an instrument, and provide insightful feedback on all relevant processes. This will allow detailed preparatory work, stimulate creative design of the worlds as a whole, and increase efficiency during the final stages of installing sculptures on location.
